General Paul Selva warned lawmakers against allowing the military to advance artificial intelligence-powered weapons systems for use in warfare.

During Tuesday’s Senate Armed Services Committee hearing, Senator Gary Peters questioned Vice Chairman of the Joint Chiefs of Staff and Air Force General Paul Selva regarding a Defense Department directive that forbids autonomous weapons systems from taking lives without clear control of the decision by a human operator.

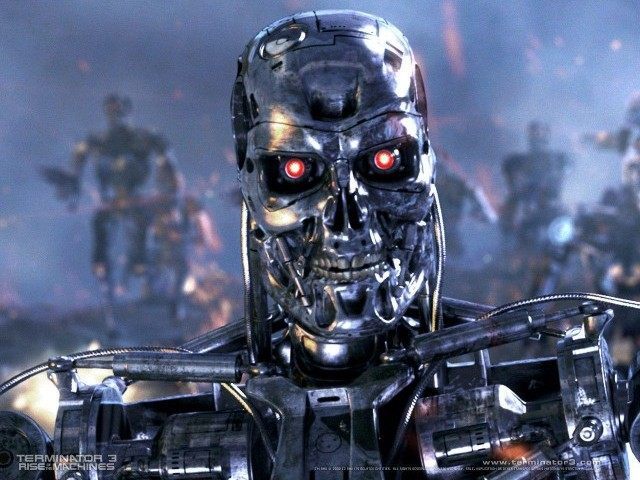

Selva was adamant that the U.S. military “keep the ethical rules of war in place, lest we unleash on humanity a set of robots that we don’t know how to control.” Selva further stated that he “[doesn’t] think it’s reasonable for us to put robots in charge of whether or not we take a human life.”

Peters was not so easily dissuaded, noting that the directive will expire within the year and arguing that “our adversaries often do not consider the same moral and ethical issues that we consider each and every day.”

Selva remained firm. He asserted that Americans should “always take our values to war,” while admitting that there would no doubt be a “raucous debate in the department about whether or not we take humans out of the decision to take lethal action.” Nevertheless, he said he was staunchly in favor of “keeping that restriction.”

But according to Selva, who as Vice Chairman of the Joint Chiefs of Staff is the second-highest-ranking military officer, choosing not to employ autonomous killers has nothing to do with the need to defend against them. As with chemical and nuclear weapons, he said, the restriction “doesn’t mean that we don’t have to address the development of those kinds of technologies and potentially find their vulnerabilities and exploit those vulnerabilities.”

General Selva’s concerns echo those of Tesla CEO Elon Musk and acclaimed astrophysicist Stephen Hawking. A 2015 open letter they signed, published by the Future of Life Institute, acknowledged AI’s “great potential to benefit humanity in many ways” but called a “military AI arms race” a “bad idea” that “should be prevented by a ban on offensive autonomous weapons beyond meaningful human control.”

Follow Nate Church @Get2Church on Twitter for the latest news in gaming and technology, and snarky opinions on both.
