More than 50 AI researchers from 30 countries have agreed to boycott the Korea Advanced Institute of Science and Technology (KAIST) over concerns about its weapons research.

The researchers signed a letter expressing concern over KAIST's plan, in partnership with the defense company Hanwha Systems, to support the development of artificially intelligent weapons systems. “At a time when the United Nations is discussing how to contain the threat posed to international security by autonomous weapons, it is regrettable that a prestigious institution like KAIST looks to accelerate the arms race to develop such weapons,” it reads.

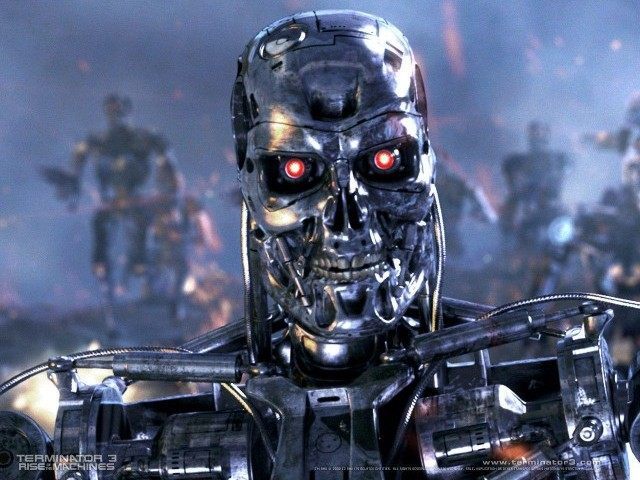

The letter calls autonomous weapons the “third revolution in warfare,” warning that they will “permit war to be fought faster and at a scale greater than ever before” and describing them as “potential weapons of terror.” The signatories assert that “despots and terrorists could use them against innocent populations, removing any ethical restraints,” and call such weapons a “Pandora’s box” that will be “hard to close if it is opened.”

In response, KAIST President Shin Sung-chul said that the university is “significantly aware of ethical concerns in the application of all technologies including artificial intelligence” and that it will focus on developing “efficient logistical systems, unmanned navigation and aviation training systems.” According to Shin, “KAIST will not conduct any research activities counter to human dignity, including autonomous weapons lacking meaningful human control.”

Professor Noel Sharkey, head of the Campaign to Stop Killer Robots, says that after receiving the university’s response, “the signatories of the letter will need a little time to discuss the relationship between KAIST and Hanwha before lifting the boycott.” Until then, the signatories will refuse to cooperate with the university in any way.

This is far from the first time prominent scientists and researchers have voiced concern about this next leap in killer technology. In a 2015 report, Human Rights Watch and Harvard Law School lamented the lack of accountability for autonomous weapons and urged a ban on them similar to the one on chemical weapons. Another open letter, backed by the late physicist Stephen Hawking, Elon Musk, and Apple co-founder Steve Wozniak, was signed by thousands of researchers concerned about the same developments. Musk in particular has continued that campaign, as Hawking did until his death.

And with entities like the Kalashnikov Group already demonstrating precisely the sort of technology our brightest minds have warned us about, it may well be time to take a closer look at the price of our advancement, and at how to ensure that it is not used to destroy its creators.
