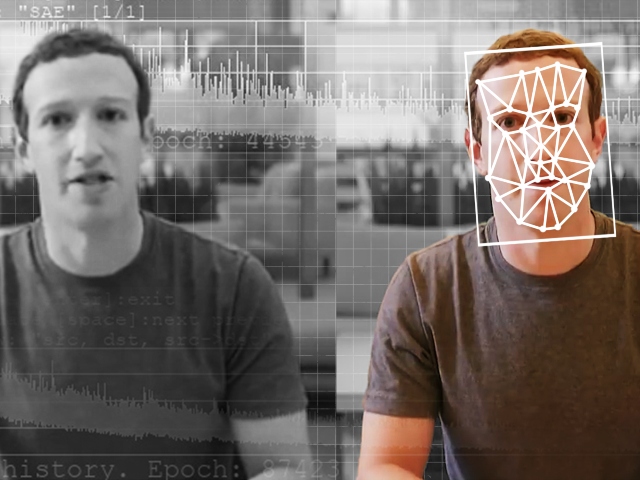

The Federal Trade Commission (FTC) has awarded prizes to four organizations for developing technologies that can distinguish between authentic human speech and audio generated by AI, as concerns grow over the influence of deepfakes on elections and consumer scams.

NPR reports that the winners of the FTC contest showcase a variety of approaches to detecting AI-generated voices, also known as audio deepfakes. OriginStory, created by researchers at Arizona State University, uses sensors to detect signs of a live human speaker, such as breathing, motion, and heartbeat. “We have this very unique speech production mechanism. And so by sensing it in parallel with the acoustic signal, you can verify that the speech is coming from a human,” explained Visar Berisha, a professor at ASU’s College of Health Solutions and part of the winning team.

Another winner, DeFake, created by Ning Zhang from Washington University in St. Louis, injects data into recordings of real voices to prevent AI-generated voice clones from sounding like the real person. This technology was inspired by earlier tools developed by University of Chicago researchers that place hidden changes in images to prevent AI algorithms from mimicking them.

AI Detect, made by startup Omni Speech, uses AI to catch AI. CEO David Przygoda explains that the company's machine learning algorithm extracts features such as inflection from audio clips and uses them to train models to differentiate between real and fake audio. “They have a really hard time transitioning between different emotions, whereas a human being can be amused in one sentence and distraught in the next,” Przygoda says of AI-generated voices.

While there are existing commercial detection tools that rely on machine learning, factors like sound quality and media format can make them less reliable. Berisha believes that AI-based detectors will become less effective over time, similar to the challenges faced by detectors of AI-generated text. This led him to develop a process that authenticates human voices as words are being spoken.

Read more at NPR.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
