Twitter revealed it will be banning “hateful display names” and published a timeline for future online safety policy changes.

TechCrunch reports that Twitter has published a timeline of changes it plans to make to its safety features, including the banning of “hateful display names,” the implementation of “witness reporting,” and changes to the content allowed on its platform. Twitter also apologized for its previous failure to prevent abuse on the platform: “Far too often in the past we’ve said we’d do better and promised transparency but have fallen short in our efforts.”

Twitter plans to ban “hateful display names” and “nameflaming,” a method of ridicule in which users change their username to mock another Twitter user. Twitter also plans to better analyze the relationship between victims and “abusers” on Twitter to ensure that abuse reports are genuine and not a concerted attempt to shut down an alleged abuser’s account over a disagreement. Twitter will also send notifications to third-party reporters of abuse on the platform in an attempt to make users feel safer.

Twitter also plans to ban “violent group” and “hate symbols” on its platform. Such images will not be allowed in avatars or on profile banners, and “sensitive content” warnings will be added to any questionable material posted in tweets. Twitter also plans to work on stopping spam from spreading on the platform, as well as using account relationship signals on user profiles to determine whether sexual advances are welcome.

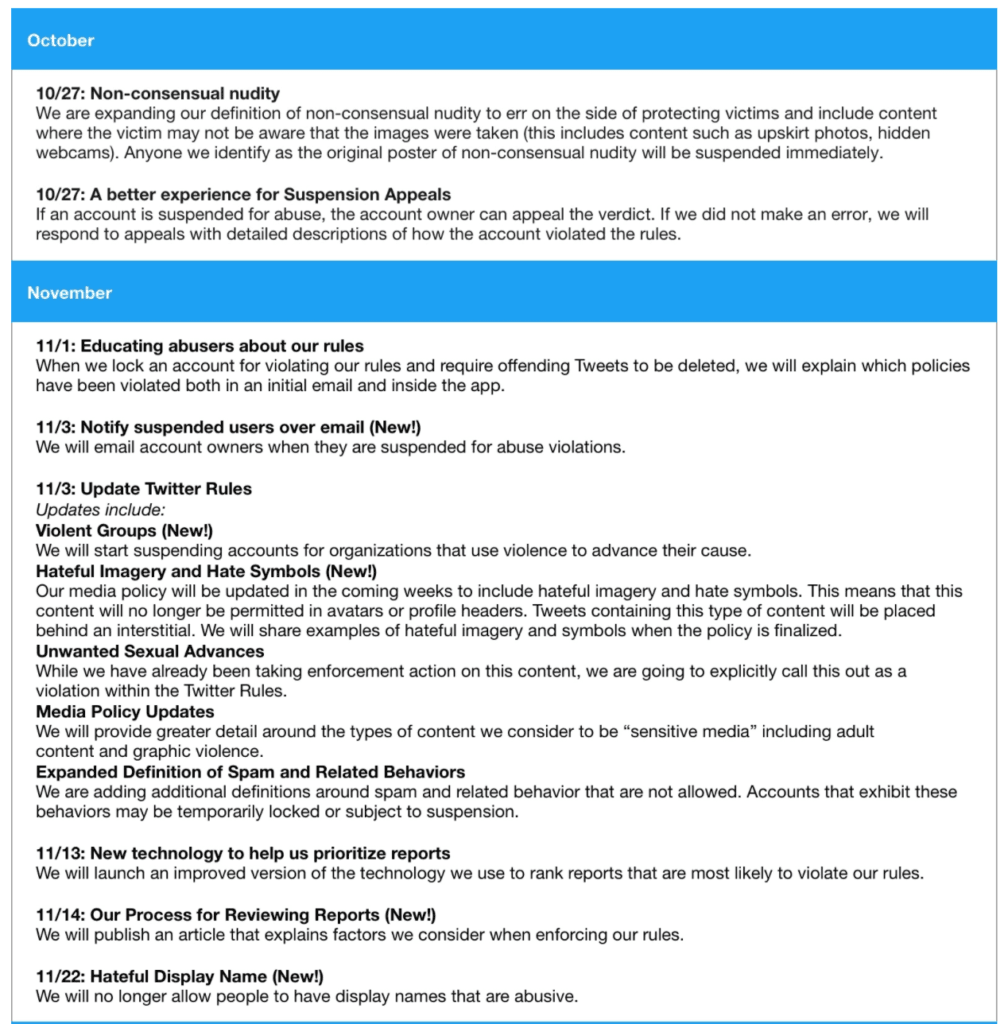

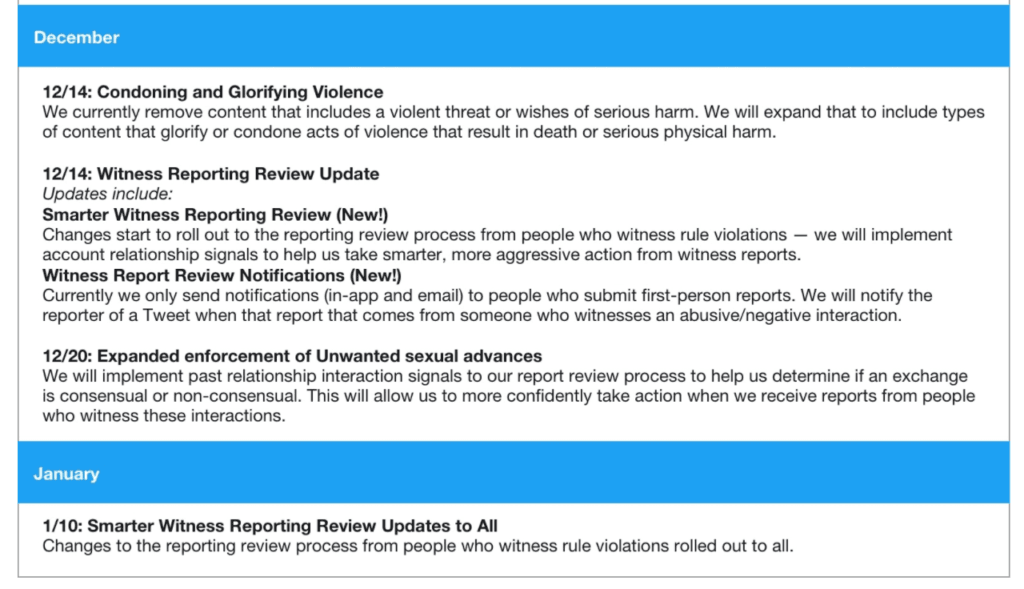

A full timeline for the rollout of these updates can be found below:

Speaking to Wired earlier this week, a Twitter representative said, “Although we planned on sharing these updates later this week, we hope our approach and upcoming changes, as well as our collaboration with the Trust and Safety Council, show how seriously we are rethinking our rules and how quickly we’re moving to update our policies and how we enforce them.”

Twitter’s head of policy wrote in an internal email, “We realize that a more aggressive policy and enforcement approach will result in the removal of more content from our service. We are comfortable making this decision, assuming that we will only be removing abusive content that violates our Rules. To help ensure this is the case, our product and operational teams will be investing heavily in improving our appeals process and turnaround times for their reviews.”

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan_ or email him at lnolan@breitbart.com
