Recently, Apple announced a new addition to its upcoming iOS 15 and iPadOS 15 firmware for iPhones and iPads. The new feature will allow Apple to scan user photos stored in Apple’s iCloud service and determine whether they contain sexually explicit images involving children. Following blowback over the Masters of the Universe scanning customers’ devices, the company is now promising it will not abuse the feature or allow governments to dictate what types of data iPhones are scanned for.

Apple claims that its method of detecting CSAM (Child Sexual Abuse Material) is “designed with user privacy in mind,” and that it does not directly access iCloud users’ photos but instead uses a device-local, hash-based lookup and matching system to cross-reference the hashes of user photos with the hashes of known CSAM.
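To make the idea of hash-based lookup concrete, here is a minimal sketch in Python. It is not Apple's implementation: the function names (`image_hash`, `find_matches`) and the sample data are hypothetical, and SHA-256 over raw bytes is used only as a stand-in. A real system of this kind would presumably need a hash that tolerates resizing and re-encoding, which an exact cryptographic hash does not.

```python
import hashlib


def image_hash(data: bytes) -> str:
    """Stand-in hash (assumption): SHA-256 of the raw image bytes."""
    return hashlib.sha256(data).hexdigest()


def build_known_hashes(samples: list[bytes]) -> set[str]:
    """Build the lookup set of hashes for a list of known images."""
    return {image_hash(sample) for sample in samples}


def find_matches(photos: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of photos whose hash appears in the known set.

    Only hashes are compared; the known set never contains image content.
    """
    return sorted(
        name for name, data in photos.items()
        if image_hash(data) in known_hashes
    )


# Hypothetical sample data for illustration only.
known = build_known_hashes([b"known-image-bytes"])
photos = {
    "a.jpg": b"holiday-photo-bytes",
    "b.jpg": b"known-image-bytes",
}
matches = find_matches(photos, known)
print(matches)
```

The sketch also illustrates the critics' point: the matching code is indifferent to what the hash list represents, so what gets flagged depends entirely on which hashes are supplied.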

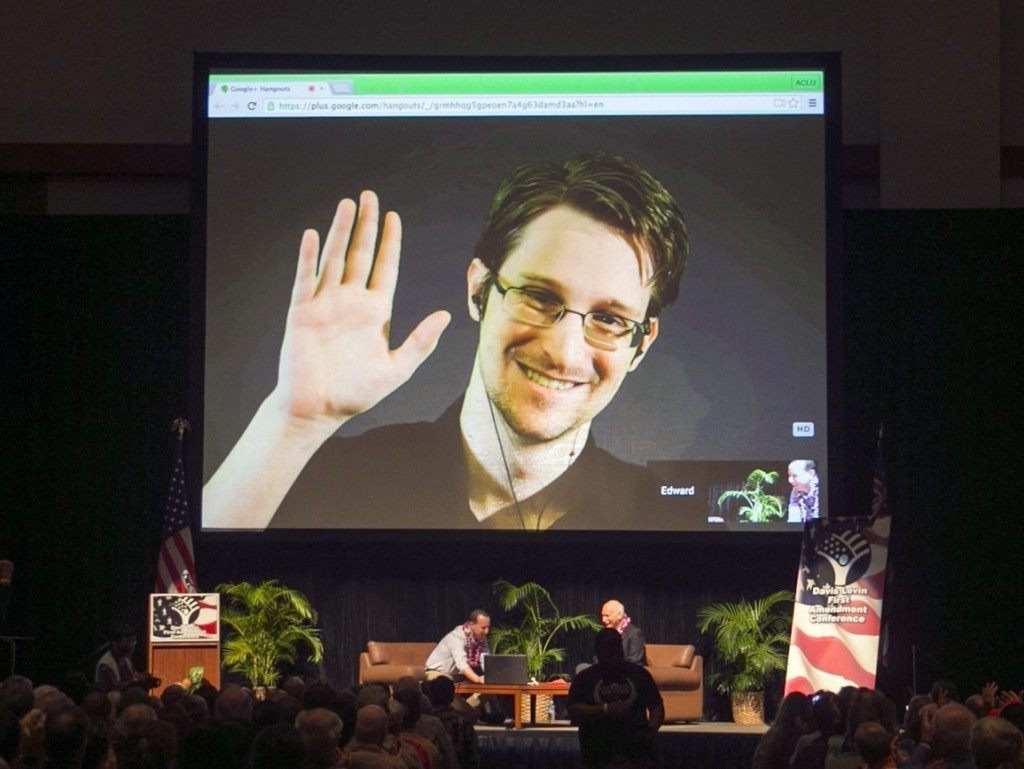

However, many privacy experts are apprehensive about the new system. NSA whistleblower Edward Snowden tweeted about the issue stating: “No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.”

Snowden was not the only privacy expert to raise concerns over the issue; the Electronic Frontier Foundation wrote: “Even a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor. We’ve already seen this mission creep in action. One of the technologies originally built to scan and hash child sexual abuse imagery has been repurposed to create a database of ‘terrorist’ content that companies can contribute to and access for the purpose of banning such content.”

Now, Apple is attempting to reassure users that their privacy will remain intact with the implementation of this new feature. The Verge reports that Apple has stated that it will not be influenced by foreign governments to use the new tech to violate user privacy.

“Let us be clear, this technology is limited to detecting CSAM [child sexual abuse material] stored in iCloud and we will not accede to any government’s request to expand it,” the company stated.

Apple claims that its system “only works with CSAM image hashes provided by NCMEC (National Center for Missing and Exploited Children) and other child safety organizations.”

Apple added: “We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future.”

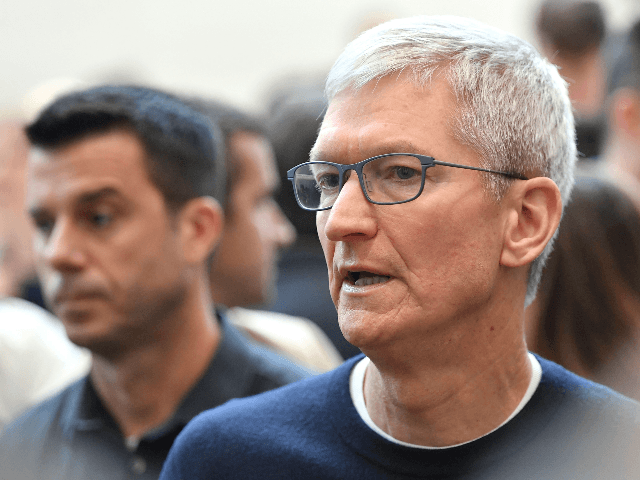

Apple CEO Tim Cook waves as he arrives for the Economic Summit held for the China Development Forum in Beijing on March 23, 2019. (Ng Han Guan/Pool/AFP via Getty Images)

However, the ease with which Apple could use this technology to search for any type of image remains a point of contention for many. The EFF stated: “All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts.”

Breitbart News senior technology correspondent Allum Bokhari wrote in a recent article:

Is stopping child abuse really the ultimate intention? Since Apple first entered the smartphone market in 2007, it has cultivated an image of ultra-privacy, making this move even more curious.

Unlike its competitor Google, Apple’s business model does not rely on mass data collection to turn a profit. Instead, the company makes its money from charging premium prices for its hardware and taking a big cut of app store revenue.

Everyone knows Google scans your Android devices for every scrap of data it can acquire, but Apple has deliberately avoided top-down scanning of devices. Until now.

Are we to believe that after 14 years, Apple has suddenly recognized the problem of child abuse imagery on the internet? And that it’s recognized it to such an extent that it’s watered down one of its biggest selling points over Google?

Read more at The Verge.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan or contact via secure email at the address lucasnolan@protonmail.com
