The world of robocalling is evolving, with generative AI now enabling scammers to mimic the voices of people we know, making fraudulent calls seem more trustworthy and heightening the risk of successful scams.

CNBC reports that robocalls have long been a nuisance, interrupting our days with misleading offers or fraudulent threats. But a new era has dawned in the realm of robocalling, one where the voices on the other end of the line might sound eerily familiar. Generative AI has entered the battlefield, armed with the capability to mimic the voices of our friends, family, or colleagues, making the deceptive calls more convincing and dangerous.

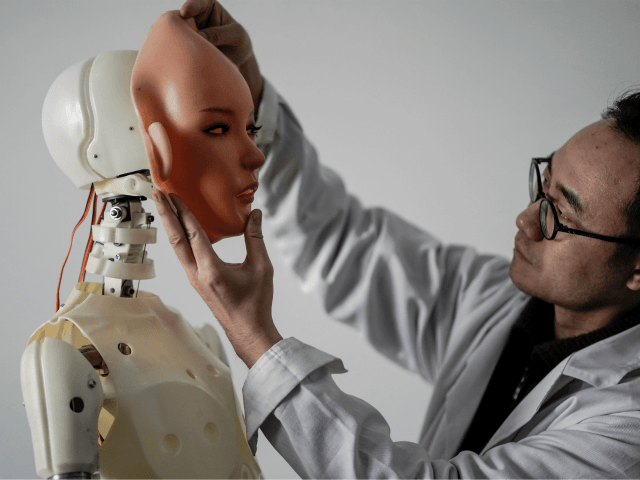

“Generative artificial intelligence is increasingly being used to mimic the voice of someone you know and communicate with you in real time,” said Jonathan Nelson, director of product management at telephony analytics and software company Hiya Inc. This evolution in technology has eroded the trust people once placed in phone calls, making every ring a potential risk.

Advances in AI have made it easier for scammers to create interactive voice response (IVR) scams, commonly known as “vishing” (voice phishing). “Generative AI can kind of take what used to be a really specialized spam attack and make it much more commonplace,” Nelson explained. The method has become more prevalent because voices are so widely shared online, which lets scammers harvest the data they need to craft their deceptive calls.

Breitbart News reported earlier this month on how such scams play out. In one recent version, parents were targeted by calls using the AI-generated voices of their children to beg for help:

The New York Post reports that residents of the Upper West Side of New York City have been left in a state of shock and fear as scammers deploy AI to simulate the voices of their children, creating realistic and distressing scenarios. One mother recounted her harrowing experience, in which she received a call from what she believed was her 14-year-old daughter, crying and apologizing, claiming she had been arrested. The voice was so convincing that the mother was prepared to hand-deliver $15,500 in cash for bail, believing her daughter had rear-ended a pregnant woman’s car while driving underage.

The scam was revealed when her actual daughter, who was in school taking a chemistry exam, contacted her to let her know she was safe. Reflecting on the incident, the mother stated, “I’m aware it was really stupid – and I’m not a stupid person – but when you hear your child’s voice, screaming, crying, it just puts you on a different level.”

Read more at CNBC.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
