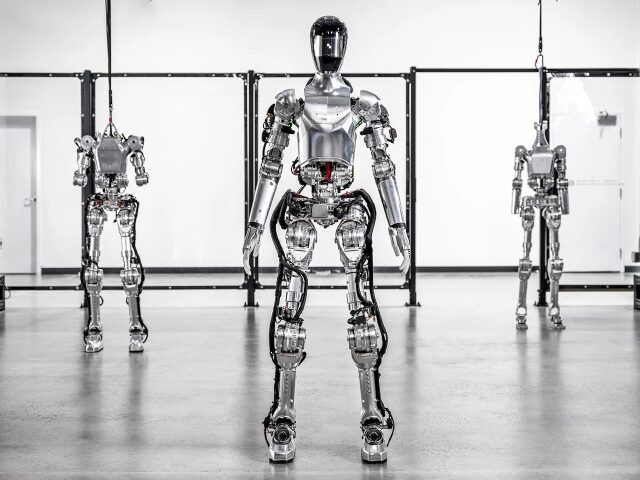

A robotics startup named Figure has showcased a humanoid robot that integrates OpenAI’s advanced language technology, enabling it to engage in real-time conversations and perform tasks simultaneously.

Decrypt reports that Figure has unveiled its latest creation: a conversational humanoid robot built on OpenAI’s artificial intelligence technology. The robot, dubbed “Figure 01,” can understand and respond to human interactions in real time, thanks to the integration of OpenAI’s language models.

The company’s recent partnership with OpenAI has brought high-level visual and language intelligence to its robots, which Figure pairs with its own neural networks for “fast, low-level, dexterous robot actions.” This combination of advanced AI and robotics has resulted in a robot that can converse with humans while carrying out tasks.

Breitbart News previously reported that Figure has gathered high-profile support, including investments by Jeff Bezos and Nvidia.

In a video demonstration released by Figure, the Figure 01 robot can be seen interacting with Figure’s Senior AI Engineer, Corey Lynch, who puts the robot through a series of tasks and questions in a simulated kitchen environment. The robot identifies objects like an apple, dishes, and cups, and when asked to provide something to eat, it promptly offers the apple, showcasing its ability to understand and act upon commands.

We are now having full conversations with Figure 01, thanks to our partnership with OpenAI.

Our robot can:

– describe its visual experience

– plan future actions

– reflect on its memory

– explain its reasoning verbally

Technical deep-dive: pic.twitter.com/6QRzfkbxZY

Corey Lynch (@coreylynch), March 13, 2024

Furthermore, Figure 01 can collect trash into a basket while simultaneously engaging in conversation, highlighting its multitasking capabilities. According to Lynch, the robot can describe its visual experiences, plan future actions, reflect on its memory, and explain its reasoning verbally – a feat that would have been unimaginable just a few years ago.

The key to Figure 01’s conversational prowess lies in the integration of OpenAI’s multimodal AI models. These models can understand and generate different data types, such as text and images, allowing the robot to process visual and auditory inputs, and respond accordingly. Lynch explained that the model processes the entire conversation history, including past images, to generate language responses, which are then spoken back to the human via text-to-speech.
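The loop Lynch describes can be sketched roughly as follows. This is a minimal illustration, not Figure’s or OpenAI’s actual code: the `Turn` and `Conversation` classes, the `stub_model` function, and the `speak` callback are all hypothetical names, and the sketch assumes the human’s speech has already been transcribed to text. The one point it mirrors from the description is that the model receives the entire history, past images included, on every turn, and that the reply is then handed to text-to-speech.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Turn:
    """One entry in the conversation history (hypothetical structure)."""
    role: str                      # "user" or "robot"
    text: str
    image: Optional[bytes] = None  # camera frame captured with this turn, if any


@dataclass
class Conversation:
    # Hypothetical callables standing in for the real components.
    model: Callable[[List[Turn]], str]  # multimodal model: full history -> reply text
    speak: Callable[[str], None]        # text-to-speech output
    history: List[Turn] = field(default_factory=list)

    def step(self, transcript: str, frame: bytes) -> str:
        # Record what the human said together with the current camera frame.
        self.history.append(Turn("user", transcript, frame))
        # The model sees the whole history, including earlier images.
        reply = self.model(self.history)
        self.history.append(Turn("robot", reply))
        # Speak the generated text back to the human.
        self.speak(reply)
        return reply


def stub_model(history: List[Turn]) -> str:
    """Stand-in for a multimodal model; it only counts what it was given."""
    images = sum(1 for turn in history if turn.image is not None)
    return f"I have seen {images} image(s) over {len(history)} turn(s)."


spoken: List[str] = []  # collects "spoken" replies in place of real audio
chat = Conversation(model=stub_model, speak=spoken.append)
chat.step("What do you see?", b"frame-1")
chat.step("Can I have something to eat?", b"frame-2")
```

Because the full history is passed in each time, the stub’s second reply reflects both frames, which is the property that would let a real model answer questions about what it saw earlier in the conversation.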

The debut of Figure 01 has sparked a major response on social media, with many impressed by the capabilities of the robot and some even drawing comparisons to science fiction scenarios. For AI developers and researchers, Lynch also provided technical detail, stating that all behaviors are driven by neural network visuomotor transformer policies that map pixels directly to actions.

Read more at Decrypt here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
