In a throwback to the antebellum era, popular photo-sharing services, from Flickr to Google Photos, have publicly come under assault for their software algorithms tagging photos of black people as gorillas and apes. But this just highlights the growing risk that “learning machines” may learn to develop human biases and even criminal tendencies.

The San Francisco Chronicle reported that when professional photographer Corey Deshon, who is black, uploaded to Flickr a digital headshot of a black man, the image was tagged as “ape.”

Jacky Alciné, who is also black, was similarly insulted when he uploaded pictures of himself and a black friend to Google Photos and the two were tagged as “gorillas” instead of human beings. When Alciné later rummaged through his library of photos, he found dozens that had also been tagged as “gorilla.”

Alciné, who is a well-known Web developer in the Bay Area, made the issue go viral when he tweeted, “Google Photos, y’all f**ked up. My friend’s not a gorilla.” He told the Chronicle, “It’s inexcusable,” and “there’s no reason why this should happen.”

Google holds itself out as a world leader in developing artificial intelligence through “machine learning.” The company apologized very publicly on Twitter for the blunder and said it is tweaking its facial recognition algorithms to fix the problem.

“We’re appalled and genuinely sorry that this happened,” a Google spokeswoman said on the company’s website. “There is still clearly a lot of work to do with automatic image labeling, and we’re looking at how we can prevent these types of mistakes from happening in the future.”

Google’s chief architect of social, Yonatan Zunger, replied on Twitter to Alciné’s anger, “Lots of work being done, and lots still to be done. But we’re very much on it.” He added that Google is specifically working to be more careful in how it assigns tags to people of different skin tones and bone features. He also said Google had spiked the “gorilla” category.

Google’s staff acknowledged that incorporating more pictures of real gorillas into Google’s machine learning system would help avoid the PR disaster. But historically, the company has released software and asked users to help it “perfect” the product.

Google launched a YouTube Kids app this year that failed miserably, despite employing automated filters, direct user feedback, and manual reviews to prevent “adult content” from being displayed to minors. Google also apologized for that fiasco, but then commented that it is “nearly impossible to have 100% accuracy” in machine learning.

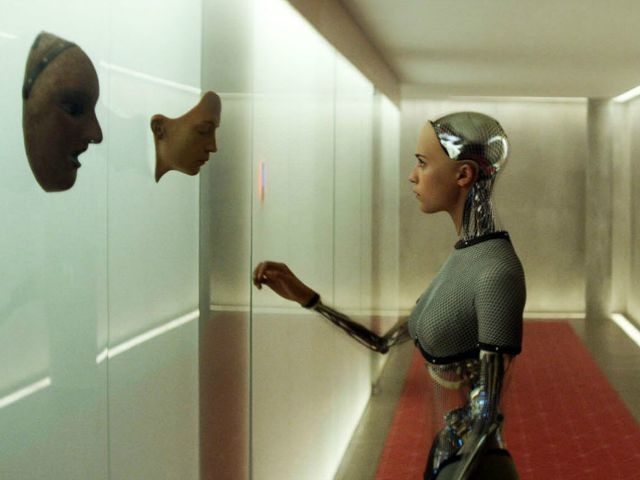

The “gorilla” fiasco highlights the risks of relying on machine learning. Google and Apple are both investing billions of dollars in developing self-driving cars that use machine learning on public roads to “recognize” objects and then “decide” whether it is optimal to continue, avoid, or stop.

This learning function creates a risk that a machine may be “brain-washed” or sabotaged by hacking. Charlie Miller and Chris Valasek made a video last week of their unannounced “car-hack” of a late-model Chrysler Jeep Cherokee being driven at 70 miles an hour by Wired magazine senior writer Andy Greenberg.

Greenberg said he was speeding along a busy interstate freeway outside of St. Louis when he began to lose control of his vehicle while 18-wheelers whizzed by. First, the air-conditioning fans all turned on high, then photos showed up on his navigation unit, music started blasting, and the windshield washers ran constantly and could not be turned off. But Greenberg went into panic mode when his accelerator stopped working as he tried to move out of the way of oncoming traffic. He eventually ended up in a ditch on the side of the road.

The hack exploited the cellular connection to the UConnect Internet software that is a standard Cherokee feature. Chrysler recalled 1.4 million vehicles Friday to fix the bug.

The most dangerous element of “machine learning” is human input. If software developers inadvertently load mostly photos of white people into facial recognition software, the machine may “learn” to have a bias against recognizing black people. But if racists, criminals, or sociopaths gain access to machines that are “learning,” they could “teach” our own machines to turn against us.
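
To make the training-data point concrete, here is a minimal Python sketch. It is not how Google, Flickr, or any real facial recognition system works. It assumes a toy detector that sees each photo as a single made-up score and draws its “person” line halfway between the average person score and the average non-person score. The groups, the scores, and the function names are all hypothetical.

```python
from statistics import mean

# Hypothetical one-number "feature scores" per photo; every value is invented.
NOT_PERSON = [0.05, 0.10, 0.15, 0.20, 0.25]
GROUP_A = [0.70, 0.75, 0.80, 0.85, 0.90]   # people the training set over-represents
GROUP_B = [0.42, 0.46, 0.50, 0.54, 0.58]   # people the training set under-represents


def train_threshold(positives, negatives):
    """Learn a cutoff: the midpoint between the two class averages."""
    return (mean(positives) + mean(negatives)) / 2


def recall(samples, threshold):
    """Fraction of samples that score above the cutoff (tagged as 'person')."""
    return sum(x > threshold for x in samples) / len(samples)


# Skewed training set: twenty photos from group A, a single photo from group B.
skewed = train_threshold(GROUP_A * 4 + [0.50], NOT_PERSON)

# Balanced training set: equal numbers of photos from both groups.
balanced = train_threshold(GROUP_A + GROUP_B, NOT_PERSON)

print("skewed   A:", recall(GROUP_A, skewed), " B:", recall(GROUP_B, skewed))
print("balanced A:", recall(GROUP_A, balanced), " B:", recall(GROUP_B, balanced))
```

With these invented numbers, the skewed training set pushes the cutoff high enough that the toy detector misses two of the five group B photos while still catching every group A photo. Training on equal numbers from both groups lowers the cutoff so that both groups are recognized. Real systems are far more complex, but the mechanism is the same: the machine draws its lines based on the examples it is given.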
