The National Highway Traffic Safety Administration (NHTSA) has issued a special order to Tesla, asking Elon Musk’s car company to provide comprehensive data on a lesser-known feature called “Elon Mode” in its Autopilot systems.

CNBC reports that the NHTSA has formally asked Tesla to share details about “Elon Mode,” a configuration that allows drivers to bypass the standard safety prompts in Tesla’s driver assistance systems. The order comes amid growing concerns about the safety implications of such features.

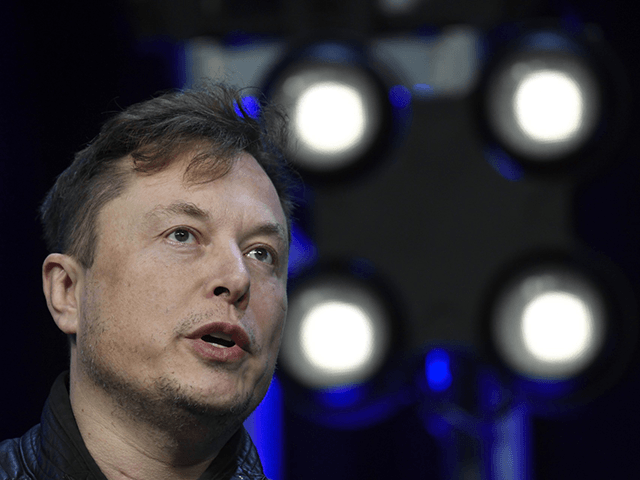

FREMONT, CA – SEPTEMBER 29: Tesla CEO Elon Musk steps out of the new Tesla Model X during an event to launch the company’s new crossover SUV on September 29, 2015 in Fremont, California. After several production delays, Elon Musk officially launched the much-anticipated Tesla Model X Crossover SUV. (Photo by Justin Sullivan/Getty Images)

“NHTSA is concerned about the safety impacts of recent changes to Tesla’s driver monitoring system,” said the agency’s acting chief counsel John Donaldson. “This concern is based on available information suggesting that it may be possible for vehicle owners to change Autopilot’s driver monitoring configurations to allow the driver to operate the vehicle in Autopilot for extended periods without Autopilot prompting the driver to apply torque to the steering wheel.”

Typically, Tesla’s Autopilot and Full Self-Driving (FSD) systems require drivers to periodically engage with the steering wheel to confirm they are attentive. Failure to do so triggers a series of warnings, commonly referred to as “nags,” that escalate in urgency. However, with “Elon Mode” activated, these warnings are effectively disabled, raising questions about the potential for misuse and the resulting safety risks.

Automotive safety researcher and Carnegie Mellon University associate professor Philip Koopman weighed in on the matter, stating, “It seems that NHTSA takes a dim view of cheat codes that permit disabling safety features such as driver monitoring. I agree. Hidden features that degrade safety have no place in production software.”

The NHTSA’s request for information is part of a broader investigation into Tesla’s driver assistance technologies, which have been involved in a series of accidents. The agency has yet to conclude its investigations into these incidents, including “fatal truck under-run crashes” and collisions involving stationary first responder vehicles.

Breitbart News previously reported on the investigation:

The NHTSA said this week that it is widening the scope of the investigation into the effectiveness of Tesla’s driver assistance system. The agency will now be reviewing information from 830,000 Tesla cars and 200 new cases of crashes involving Tesla cars utilizing the Autopilot function.

The NHTSA said that it is treating the investigation as an “Engineering Analysis,” which is necessary before possibly issuing a recall of cars fitted with the Autopilot feature. According to a press release, the NHTSA’s expanded probe will “explore the degree to which Autopilot and associated Tesla systems may exacerbate human factors or behavioral safety risks by undermining the effectiveness of the driver’s supervision.”

The agency has added six more crashes involving first-responder vehicles to its analysis of Tesla’s Autopilot function since launching the probe ten months ago. The agency said it found that Tesla’s warning system activated only moments before the collision with another vehicle. The agency added that “Automatic Emergency Braking” activated in only about half of the crashes. “On average in these crashes, Autopilot aborted vehicle control less than one second prior to the first impact,” the agency’s press release stated.

For years, Tesla has maintained that its Autopilot and FSD systems are “level 2” driver assistance technologies and not fully autonomous systems, despite marketing that could suggest otherwise. This latest development adds another layer of complexity to the ongoing debate about the safety and regulation of self-driving technologies.


Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan
