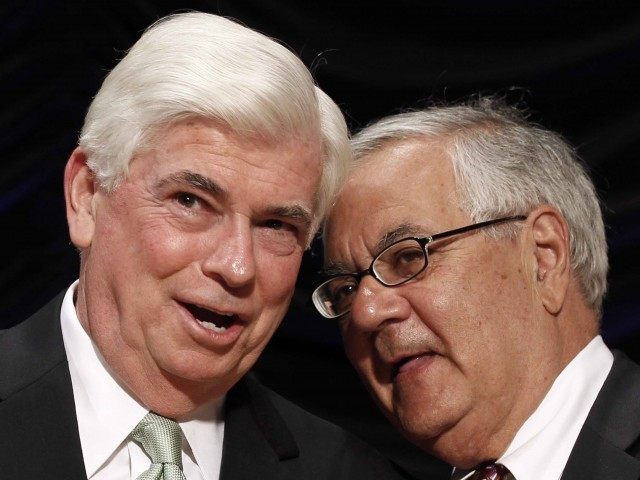

The Obama Administration’s Dodd-Frank Act, passed early in his first term to supposedly punish Wall Street, has had the adverse effect of actually making billions for Wall Street hedge funds by cutting in half the percentage of Americans who could qualify for a mortgage.

With Democrats in filibuster-proof control of both houses of Congress after their 2008 election sweep, the Obama Administration bludgeoned through the ‘Dodd-Frank Wall Street Reform and Consumer Protection Act’ (Dodd-Frank Act) to supposedly punish Wall Street and expand lending to average Americans. In a backhand at conservatives, Obama hosted the signing of one of the biggest expansions of the federal regulatory state at the Ronald Reagan Building in Washington, D.C. The President heaped praise on the legislation at the highly promoted ceremony:

“With this law, we’ll crack down on abusive practices in the mortgage industry. We’ll make sure that contracts are simpler -- putting an end to many hidden penalties and fees in complex mortgages -- so folks know what they’re signing.”

But the real impact of the legislation was to punish the average American interested in buying a home by driving up the average FICO credit score required to qualify for a mortgage from about 710 to a prohibitive 770, according to Bloomberg.

As a result of the new standards, Dodd-Frank’s regulatory structure slashed the percentage of individual Americans who could qualify for a mortgage from 52 percent before President Obama to 26 percent afterwards. The National Association of Realtors® noted that five years after the law’s passage, the percentage of first-time home buyers had plunged to the “lowest point in nearly three decades and is preventing a healthier housing market from reaching its full potential.”

The only class of Americans that benefited from Dodd-Frank was multibillion-dollar Wall Street hedge funds and private equity firms. They lobbied for the Federal Reserve to drastically cut interest rates and for Congress to write Dodd-Frank’s rules to make it easier for banks to lend huge sums to institutional borrowers. While mortgage debt shriveled over the next five years, Wall Street securities lending skyrocketed by over 150 percent as mostly institutional investors gorged on really cheap loans.

Unlike prior U.S. real estate crashes, where first-time buyers tended to flock in after prices crashed, the dramatically higher lending requirements forced more home liquidations and foreclosures as existing borrowers were unable to qualify for refinancing, despite the Fed radically lowering interest rates.

Hedge funds and other institutional buyers swept up huge numbers of distressed properties and converted them to rentals. RealtyTrac property research documented that hedge funds bought at least 386,000 single-family homes across the United States during the Obama Administration and have turned most into rentals.

Hedge fund buying was most significant in cities like Phoenix, Las Vegas, Atlanta and parts of Southern California, where housing prices plunged the most during the financial crisis. The New York Times reported “that institutional buyers have crowded out first-time buyers” and Bloomberg reported that institutional investors’ buying has “fueled double-digit recoveries” in home prices.

The Blackstone Group L.P., a Wall Street multinational private equity firm, is believed to be the largest acquirer of single-family homes for rent. It borrowed almost $8.6 billion to buy 45,000 homes in 14 cities. In the first couple of years after Dodd-Frank, it averaged buying about 17 homes a day.

Other Wall Street players that got in on the game of borrowing cheap Fed cash to convert single-family homes into rentals included Och-Ziff Capital Management Group, Lennar Group, Oaktree Capital Management, Starwood Capital Management and American Homes 4 Rent.

This explains why during the Obama Administration, the home ownership rate for all Americans plunged from 67.4 percent to 63.4 percent, the lowest point since 1965.

But during the same six-year period, hedge funds have made about $1 trillion in profit by buying up single-family homes. Despite some of the lowest inflation in American history, hedge funds are now pushing rent increases in Phoenix, Las Vegas, Atlanta and parts of Southern California at about 5 percent, double the national average.
