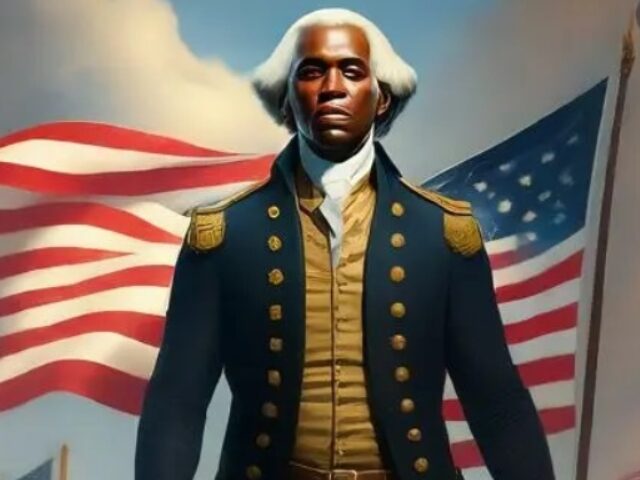

Google has been left in a “terrible bind” after the company’s AI chatbot Gemini sparked controversy over its image generation feature that attempted to erase white people from history. Facing immense backlash and mockery from every corner of the internet, the Masters of the Universe are desperately working to fix the system, which one AI expert has mockingly labeled a “stochastic parrot.”

Breitbart News previously reported that Google’s market value plunged by $90 billion amid controversies surrounding its new generative AI service Gemini. The ultra-woke AI became instantly famous for erasing white people from history, facing widespread mockery not only for its wildly inaccurate images, but also for defending pedophilia and Joseph Stalin.

Google CEO Sundar Pichai speaks during Google for India 2022 event in New Delhi, Monday, Dec. 19, 2022. The annual event kicked off with company executives announcing various new products, projects and developments. (AP Photo/Manish Swarup)

Many media outlets quickly noted that Gemini seemed to be deliberately biased against white people, citing the ahistorical images as proof. Elon Musk, who recently acquired Twitter, also noted the issue and singled out Google leaders like Gemini product lead Jack Krawczyk, who has a history of tweeting out his anger against “white privilege” and even crying after voting for Joe Biden. In one post from 2018, Krawczyk stated: “This is America where racism is the #1 value our populace seeks to uphold above all…”

The head of Google's Gemini AI everyone.

And you wonder why it discriminates against white people. pic.twitter.com/wyhSmCaowG

— Leftism (@LeftismForU) February 22, 2024

Then he called his Mommy, drank a whole case of soy milk & bing-watched Rachel Maddow pic.twitter.com/B1yV0OqhQr

— Elon Musk (@elonmusk) February 22, 2024

Now, Bloomberg reports that according to sources familiar with the matter, Google is desperately implementing technical fixes in Gemini to reduce racial and gender bias in its outputs, having failed to fully anticipate how the image generator could misfire in certain contexts. The company acted quickly to pause the feature on February 23, pending changes to the system.

Google uses an approach called prompt engineering to steer its AI systems away from “problematic” responses. This involves modifying the wording of prompts fed into the model without informing users, such as adding different racial and gender qualifiers.

Laura Edelson, an assistant professor at Northeastern University who has studied AI systems, commented: “The tech industry as a whole, with Google right at the front, has again put themselves in a terrible bind of their own making. The industry desperately needs to portray AI as magic, and not stochastic parrots. But parrots are what they have.”

Others argue AI image generation is inherently limited in depicting history accurately. Since the systems are trained on biased web data, companies try to manipulate outputs to counteract stereotypes, but imperfect training data means fundamental issues remain regardless of safeguards put in place. This obviously backfired in the case of Gemini AI, which portrayed Viking warriors as black and Hispanic to avoid the “stereotype” of Norse Vikings.

In an internal memo to employees, Google CEO Sundar Pichai acknowledged that the images and text produced by the company’s Gemini AI have “offended our users and shown bias.” He stated plainly that this is “completely unacceptable” and that Google “got it wrong.”

With Google now on the defensive, some fear it may opt for fewer protections on its AI, which could ultimately be detrimental. For now, the company says it hopes to relaunch the image feature within weeks after making improvements.

Read more at Bloomberg here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
