China’s state-run Global Times on Thursday demanded a leading role for Beijing in formulating the global rules governing artificial intelligence (AI).

The Chinese paper was correct to believe that AI is something everyone in the world should be concerned about, but neglected to mention that China is one of the big reasons they should be concerned.

China is, without question, the world leader in using artificial intelligence technology to create totalitarian surveillance mechanisms. The Global Times brushed up against this point with amusing delicacy: it boasted that facial recognition technology at a Chinese airport recently helped catch a suspect accused of murdering his mother, but studiously avoided mentioning the inescapable surveillance mousetraps created with such technology in places like the Xinjiang region.

The capture of the alleged murderer by airport security was an interesting demonstration of what AI is already capable of. Barely six months after the airport in Chongqing installed a facial recognition system, a fugitive named Wu Xieyu, who had evaded the authorities for three years by inventing dozens of false identities, strolled into the airport and was identified by computerized cameras in a matter of minutes.

The Global Times argued that China should have a leading role in formulating “AI governance,” a worldwide code of ethics for the use of advanced autonomous computer technology. The paper admitted China does not have a firm set of legal protections for personal information in place, but argued that no one else in the world really has one yet and that Beijing’s authoritarian government is better positioned than most to impose such a code on giant tech companies:

> First, we should pay more attention to AI governance, regarding it as a top priority. We must have a global vision from the very beginning in terms of AI governance and need to actively participate in governance initiatives of international organizations.
>
> Second, relevant government authorities need to change the “development before governance” approach, focusing on governance while developing. Since many AI applications may directly affect public interests, it is necessary to abandon the “black box operation” mode and improve the publicity of relevant projects.
>
> Third, given the difficulties in system construction, we must solve core problems first to address main conflicts. While the establishment of a personal information protection system lags behind, we need to make use of latecomer advantages to create more forward-looking legislation for personal information protection based on trends in AI development.
>
> Last but not least, we have accumulated a lot of problems in internet governance in the past, such as the excessive collection and abuse of personal data on some platforms, the transparency of algorithms, the implementation of a third-party supervision system, the fairness and neutrality of search engines, and anti-monopoly issues regarding the internet. Before the mass application of AI technology takes effect, it is necessary to fix these problems to avoid their further amplification and deterioration.

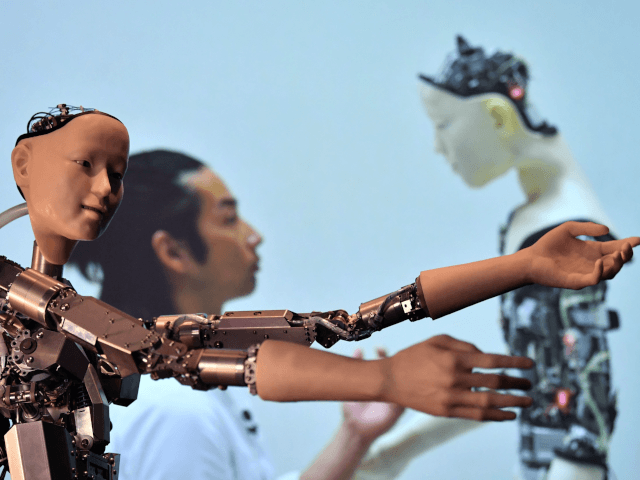

The World Economic Forum is already hard at work putting together public-private councils to hammer out AI governance principles, as is the European Union. Speed is the heart of the problem. AI does not necessarily refer to living, thinking machines, as popular imagination tends to interpret the term. The defining characteristics of AI are speed and autonomy. AI systems work independently without detailed human supervision, which is all but impossible because the machines process so much data at incredible speed.

Human beings spent years trying to catch Wu Xieyu with their Mark I eyeballs. An AI system did it almost instantly by comparing his face, and everyone else’s, with a titanic database of people the authorities were interested in. Wu was nailed with 95 percent accuracy by four different cameras before a human overseer had any decisions to make at all.

Part of the problem is that different nations have different expectations for AI ethics and different standards of privacy. The United States theoretically has the most stringent expectations for privacy and individual autonomy in the world, although Americans have proven far more relaxed about extensive monitoring of their digital activity by both public and private organizations than civil libertarians expected at the turn of the millennium. The U.S. has been inclined to give industry self-regulation a chance before stepping in with federal laws.

The Europeans are a little further down the path of centralized regulation, as exemplified by the 2018 General Data Protection Regulation (GDPR). The GDPR leans far more strongly toward protecting the privacy of individuals than authoritarian regimes like China or Russia consider reasonable. American and European ethicists are worried about how AI could reshape society. Authoritarian states are chomping at the bit to do exactly that. Chinese citizens are currently finding themselves unable to get on board trains because an AI system decided they were poor citizens.

The Organization for Economic Co-operation and Development (OECD) bills its work as the first international effort to establish principles for AI governance, with 36 member countries and 6 non-member partners taking part. The organization has numerous committees and subcommittees covering different aspects of the global digital economy. Its first set of AI recommendations was published this week, with an endorsement from the U.S. government. China, however, is not a member of the OECD.
