Deepfake porn is on the rise and now makes up 98 percent of all deepfakes, according to a report by security firm Home Security Heroes. Authorities say there is nothing they can do about deepfakes, and the vast majority of AI-generated pornography targets women.

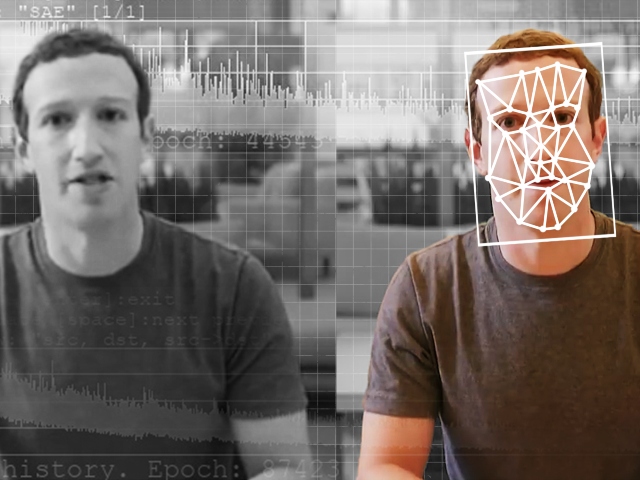

There is an explosion of AI sites and apps that allow users to put anyone’s face onto images of naked bodies, creating very realistic fake pornography. This is known as a “deepfake,” and it can be very difficult for people to tell that such an image isn’t real.

Porn, a lot of which involves celebrities, makes up 98 percent of all deepfakes, according to a report by security firm Home Security Heroes.

The phenomenon appears to have started in 2017, when a Reddit user uploaded inappropriate, albeit basic, images and videos using the faces of actresses Emma Watson, Jennifer Lawrence, and other female celebrities, according to a report by Daily Mail.

Now, there is a seemingly never-ending supply of websites and apps giving users the tools to generate entirely fake yet very realistic images at the touch of a button.

Henry Ajder, a deepfake expert and visiting researcher at Cambridge University, told Daily Mail there are two main types of tools, the most common being so-called “face-swapping” apps, which can be downloaded from the Apple and Android app stores.

The second most popular — and more advanced — tool for deepfakes involves AI. One website called “DeepNude” claims that users will be able to “See any girl clothes-less with the click of a button,” Daily Mail reports.

A “standard” package on DeepNude reportedly allows users to create 100 images per month for $29.95, while $99 per month buys a “premium” package of 420 images.

Betraying the deepfake industry’s overwhelming focus on targeting women, most of these AI tools do not work on images of men. A test by Vice on one of the apps revealed that after uploading a photo of a man, his undergarments were replaced with female genitalia.

When it comes to the dissemination of such porn, roughly 250,000 deepfake videos and images can currently be found online across 30 websites, according to an investigation by Wired. That estimate is also considered very conservative.

Of that figure, 113,000 were reportedly uploaded in the first nine months of 2023 alone, showing how quickly the deepfake porn phenomenon is growing.

This is also becoming a problem among younger, school-aged children, given that they now have easy access to porn. Moreover, kids are known to be mean to one another, bullying their peers online and elsewhere.

In October, male students at a New Jersey high school were reportedly caught sharing AI-generated nudes featuring the faces of their female classmates, some of whom were as young as 14.

“Previously, you would have to have had intimate experience with a person to capture that content. Now, you don’t. If you’ve got access to their social media profiles [as many classmates or colleagues do], and there’s lots of pictures of them on there [that’s all you need],” Ajder told Daily Mail.

“It doesn’t have to be something that people think is real for it to be traumatic and humiliating,” Ajder added.

You can follow Alana Mastrangelo on Facebook and X/Twitter at @ARmastrangelo, and on Instagram.
