Emerging AI capabilities are raising ethical questions about digitally resurrecting deceased loved ones. One expert explains, “It’s really worrying that these new tools might be marketed to people who are in a very vulnerable state, people who are grieving.”

New Scientist reports that AI systems trained on a deceased person’s texts and emails may soon make it possible to create conversational “ghosts” of lost loved ones. But researchers warn that this could seriously harm the mental health of those left behind.

A recent study by Jed Brubaker, an information scientist at the University of Colorado Boulder, highlights the potential risks. Brubaker explains that these AI chatbots could offer comfort and an interactive legacy, but they also risk disrupting healthy grieving by fostering dependence and addiction.

“I think some people might see these as gods,” said Brubaker. “I don’t think most people will. You’ll have a group of people who find them just weird and creepy.” Brubaker worries that such extreme attachment could spur new religious movements and beliefs, and he recommends that existing religions issue guidance on the use of AI ghosts. He also advises researchers to proceed cautiously in further developing this application of AI, given its potential impact on mental health.

Mhairi Aitken, a researcher at the Alan Turing Institute in London, shares Brubaker’s concerns. She warns that marketing AI ghosts to vulnerable, grieving people may impede their ability to move on, a crucial part of the healing process. Aitken suggests that regulation may be necessary to prevent an AI from being created from someone’s data without their prior consent.

“It’s really worrying that these new tools might be marketed to people who are in a very vulnerable state, people who are grieving,” said Aitken. “An important part of the grieving process is moving on. It’s remembering and reflecting on the relationship, and holding that person in your memory – but moving on. And there’s real concern that this might create difficulties in that process.”

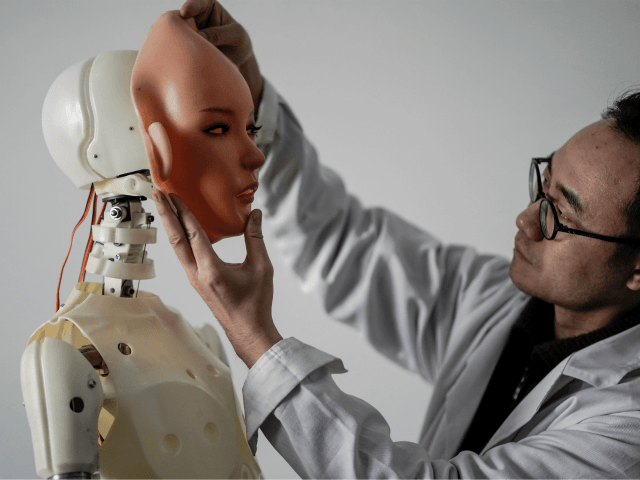

The technology for building conversational bots that replicate specific people is advancing rapidly. AI models like OpenAI’s ChatGPT can already generate remarkably human-like text after being trained on vast datasets. Given enough data about a particular individual, such systems may soon be able to sound uncannily like that person.

Read more at New Scientist.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
