Facebook could artificially limit the reach of your content even if it doesn’t break the platform’s rules, according to a new post from Mark Zuckerberg that describes how the platform will limit “sensational,” “provocative” and “offensive” content that allegedly comes close to violating rules.

In a lengthy blog post, the Facebook CEO framed the natural preferences of users as a problem. “Left unchecked, people will engage disproportionately with more sensationalist and provocative content,” wrote Zuckerberg.

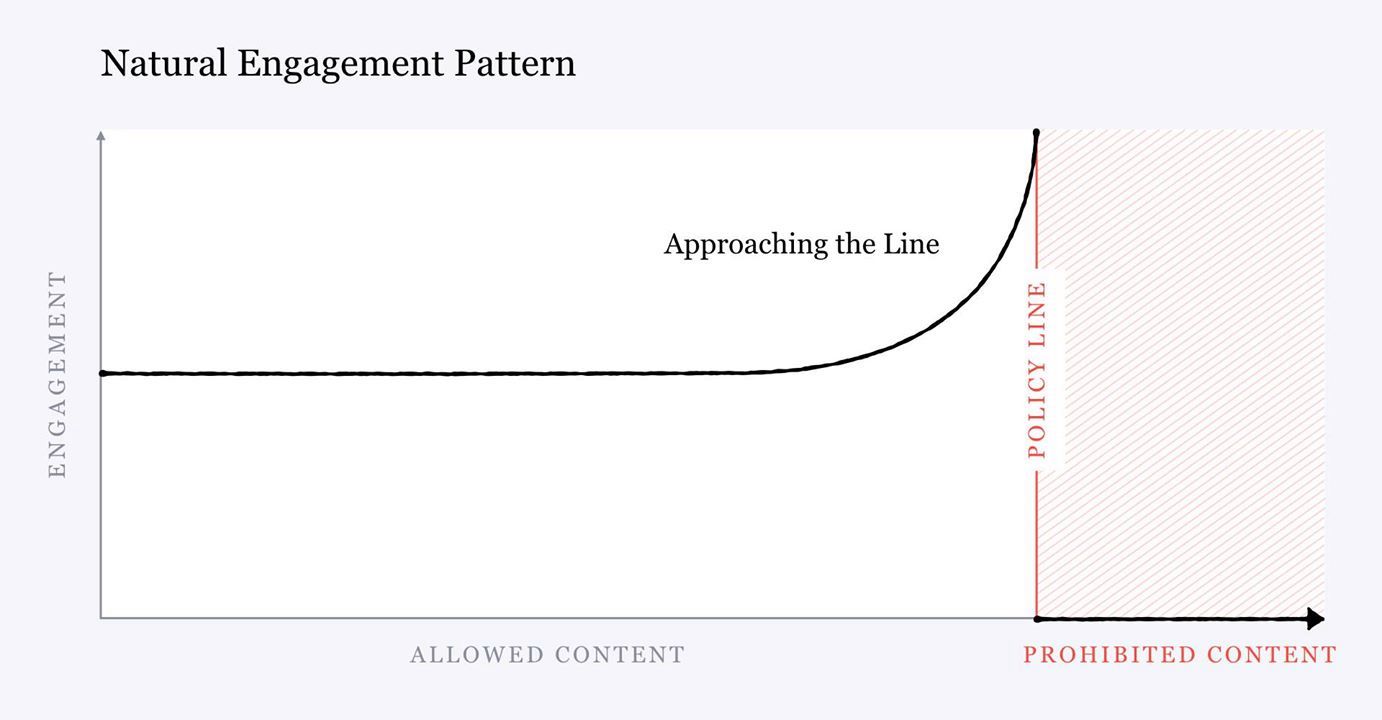

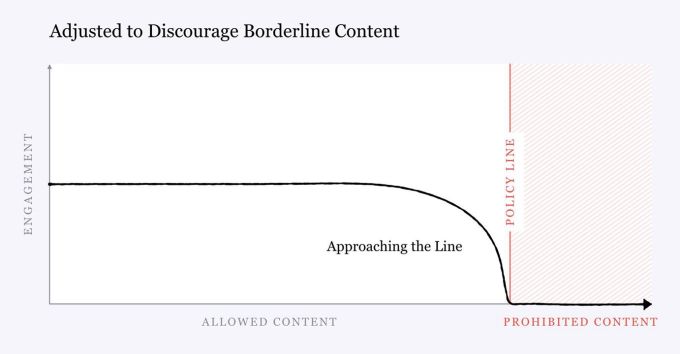

His post included two images showing how Facebook plans to limit the traffic of “sensationalist and provocative content.” The images contrast the natural pattern of users’ engagement with posts with the socially engineered pattern that Facebook hopes to achieve.

A crucial distinction between this new announcement and previous ones is that the label “sensationalist and provocative” does not imply that the material Facebook intends to censor is untrue or inaccurate. This censorship is purely an outgrowth of Facebook’s worldview and of its determination of what counts as “sensationalist and provocative.”

Facebook’s own research found that posts which were “offensive” but not “hate speech” ended up getting more views. Zuckerberg admits that such “offensive” posts will have their engagement artificially limited in future.

> Interestingly, our research has found that this natural pattern of borderline content getting more engagement applies not only to news but to almost every category of content. For example, photos close to the line of nudity, like with revealing clothing or sexually suggestive positions, got more engagement on average before we changed the distribution curve to discourage this. The same goes for posts that don’t come within our definition of hate speech but are still offensive.

Again, the post revealed little about how Facebook determines the “offensiveness” of content, much less why the company has decided that offensiveness is inherently bad. And offensiveness to whom? Will Christians be protected from offense in the same way that Muslims are?

How Facebook intends to arrive at an objective definition of such words remains a mystery, but it’s clear from the post that machines will be doing the identifying, not humans.

> Some categories of harmful content are easier for AI to identify, and in others it takes more time to train our systems. For example, visual problems, like identifying nudity, are often easier than nuanced linguistic challenges, like hate speech. Our systems already proactively identify 96% of the nudity we take down, up from just close to zero a few years ago. We are also making progress on hate speech, now with 52% identified proactively. This work will require further advances in technology as well as hiring more language experts to get to the levels we need.

The goal, according to Zuckerberg’s post, is to socially engineer Facebook users with new incentives. When users are left to their own devices, they prefer allegedly “sensationalist, provocative, and offensive” content that Zuckerberg doesn’t like, so he wants to change that. Users’ old preferences were no good for Facebook, so new ones have to be created.

> I believe these efforts on the underlying incentives in our systems are some of the most important work we’re doing across the company. We’ve made significant progress in the last year, but we still have a lot of work ahead. By fixing this incentive problem in our services, we believe it’ll create a virtuous cycle: by reducing sensationalism of all forms, we’ll create a healthier, less polarized discourse where more people feel safe participating.

“We still need to determine exactly what falls in the ‘borderline’ category—and we are gathering external input as part of that process. While we’re still at the early stages of figuring out these types of details, we will communicate publicly what those categories are. To reiterate, people will be able to opt out of this setting.”

Allum Bokhari is the senior technology correspondent at Breitbart News. You can follow him on Twitter, Gab.ai and add him on Facebook. Email tips and suggestions to allumbokhari@protonmail.com.
