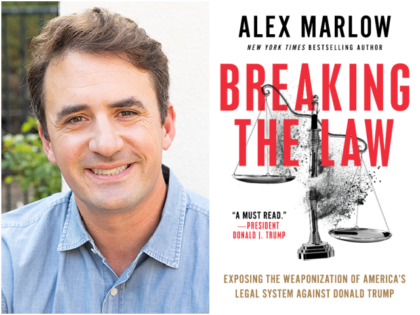

Breaking the Law

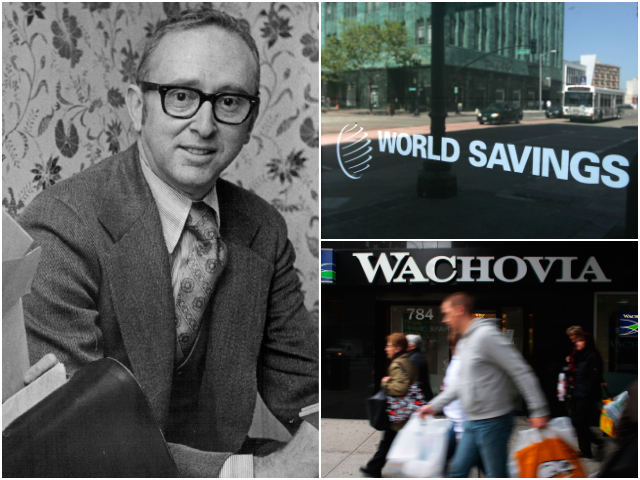

Meet Herb Sandler: Mysterious Leftist Billionaire Responsible for Bankrolling Lawfare Superstructure

In recent years, no one has done more to weaponize fake news against conservative jurists than ProPublica, a nonprofit largely funded by the Sandler Foundation, which was founded by the late banking magnate and billionaire Herb Sandler.