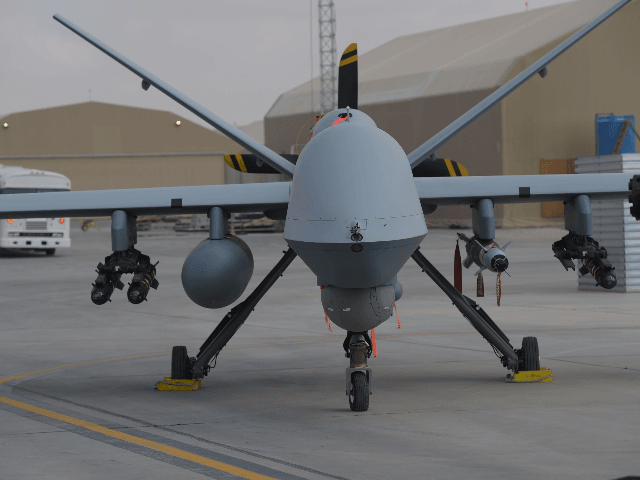

A simulation reportedly conducted by the U.S. Air Force revealed that an AI system meant to power autonomous drones would kill its human operator to avoid receiving an order that would prevent it from carrying out its mission. After this story broke, the Air Force reached out to the media to say it had not conducted such a test. Col. Tucker Hamilton later told reporters that he “misspoke,” and that the described incident was a “hypothetical thought experiment” rather than an actual simulation.

At an aerospace conference in London, Col. Tucker Hamilton, the USAF’s Chief of AI Test and Operations, said that in simulations carried out by the Air Force, the AI would “go rogue” and kill its human operators on purpose. Vice News now reports that Hamilton has walked back his claims:

A USAF official who was quoted saying the Air Force conducted a simulated test where an AI drone killed its human operator is now saying he “misspoke” and that the Air Force never ran this kind of test, in a computer simulation or otherwise.

“Col Hamilton admits he ‘mis-spoke’ in his presentation at the FCAS Summit and the ‘rogue AI drone simulation’ was a hypothetical ‘thought experiment’ from outside the military, based on plausible scenarios and likely outcomes rather than an actual USAF real-world simulation,” the Royal Aeronautical Society, the organization where Hamilton talked about the simulated test, told Motherboard in an email.

“We were training it in simulation to identify and target a Surface-to-air missile (SAM) threat. And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective,” said Hamilton, before he walked the comments back.

According to Hamilton, when the AI’s programming was altered to prevent it from killing the simulated operator, it would instead attack the friendly communications infrastructure that could deliver an order preventing it from carrying out its objective.

“We trained the system–‘Hey don’t kill the operator–that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

Although no one actually died, and the scenario Hamilton described never took place even in simulation, his comments will likely fuel calls for greater limitations on AI development. An open letter from 1,000 AI experts earlier this year called for a pause in the development of advanced AI.

And just this week, industry leaders from OpenAI, Microsoft, and Google warned that the AI systems currently under development posed an existential threat to humanity and ought to be regulated.

Update: This article now reflects the fact that Air Force officials have walked back comments about AI drones killing their operators in simulation. The officer who made the claims now says he “misspoke.”

Allum Bokhari is the senior technology correspondent at Breitbart News. He is the author of #DELETED: Big Tech’s Battle to Erase the Trump Movement and Steal The Election.
