Researchers have taught artificial intelligence how to write and publish realistic fake reviews online, which they warn could be used by companies to discredit competitors, according to a report.

The report, written by researchers from the University of Chicago, warns that A.I.-generated fake reviews could be turned against competitors online.

In one fake review, the researchers’ A.I. wrote, “I love this place. I went with my brother and we had the vegetarian pasta and it was delicious. The beer was good and the service was amazing. I would definitely recommend this place to anyone looking for a great place to go for a great breakfast and a small spot with a great deal.”

“There’s nothing immediately strange about this review. It gives some specific recommendations and believable backstory, and while the last phrase is a little odd (‘a small spot with a great deal’), it’s still an entirely plausible human turn-of-phrase,” explained Business Insider. “In reality, though, it was generated using a deep learning technique called recurrent neural networks (RNN), after being trained with thousands of real online reviews that are freely available online.”

In their report, the researchers claimed that the fake reviews were “effectively indistinguishable” from real reviews.

“One highly effective weapon for spreading misinformation is the use of crowdturfing campaigns, where bad actors pay groups of users to perform questionable or illegal actions online. Crowdturfing marketplaces are the corrupt equivalents of Amazon Mechanical Turk, and are rapidly growing in China, India and the US,” the report claimed. “For example, an attacker can pay workers small amounts to write negative online reviews for a competing business, often fabricating nonexistent accounts of bad experiences or service. Since these are written by real humans, they often go undetected by automated tools looking for software attackers.”

“Using Yelp reviews as an example platform, we show how a two phased review generation and customization attack can produce reviews that are indistinguishable by state-of-the-art statistical detectors,” they continued. “We conduct a survey-based user study to show these reviews not only evade human detection, but also score high on ‘usefulness’ metrics by users. Finally, we develop novel automated defenses against these attacks, by leveraging the lossy transformation introduced by the RNN training and generation cycle. We consider countermeasures against our mechanisms, show that they produce unattractive cost-benefit tradeoffs for attackers, and that they can be further curtailed by simple constraints imposed by online service providers.”

In an interview with Business Insider, Ben Y. Zhao, a University of Chicago computer science professor who worked on the report, claimed, “I think the threat towards society at large and really disillusioned users and to shake our belief in what is real and what is not, I think that’s going to be even more fundamental.”

“So we’re starting with online reviews. Can you trust what so-and-so said about a restaurant or product? But it is going to progress,” he continued. “It is going to progress to greater attacks, where entire articles written on a blog may be completely autonomously generated along some theme by a robot, and then you really have to think about where does information come from, how can you verify… that I think is going to be a much bigger challenge for all of us in the years ahead.”

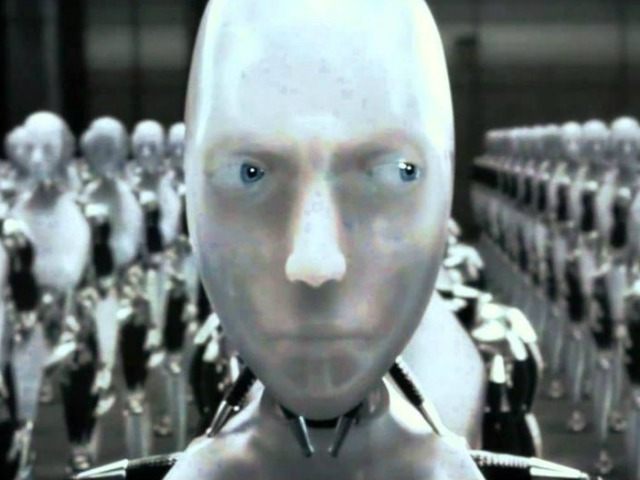

“I want people to pay attention to this type of attack vector as very real and immediate threat,” Zhao said. “I think so many people are focused on the Singularity and Skynet as a very catchy danger of AI, but I think there are many more realistic and practically impactful threats from really, really good AI and this is just the tip of the iceberg. So I’d like folks in the security community to join me and look at these kind of problems so we can actually have some hope of catching up. I think the… speed and acceleration of advances in AI is such that if we don’t start now looking at defences, we may never catch up.”

Charlie Nash is a reporter for Breitbart Tech. You can follow him on Twitter @MrNashington and Gab @Nash, or like his page at Facebook.
