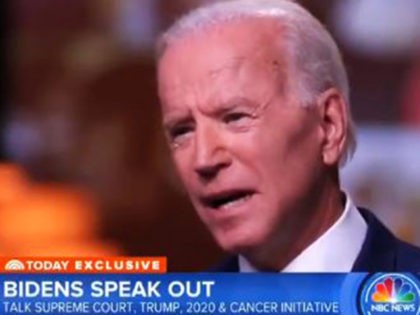

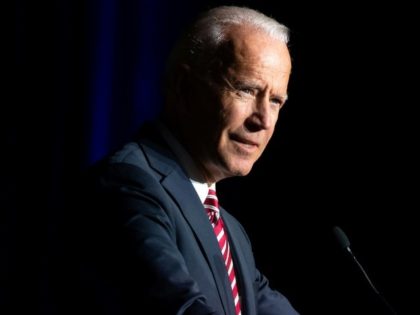

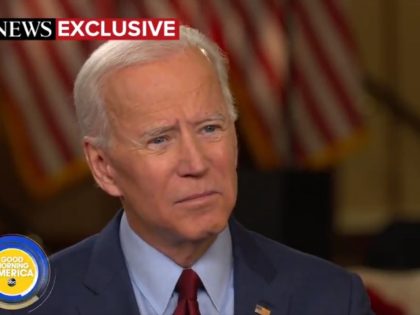

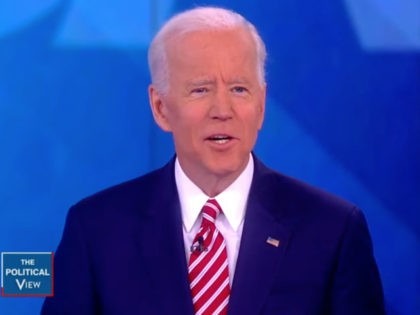

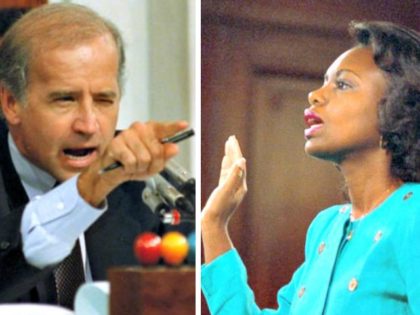

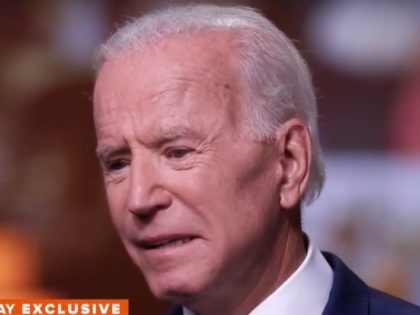

Anita Hill: Not Acceptable for Biden to Just ‘Apologize for the Past’; He Needs to Help Stop Sexual Assault

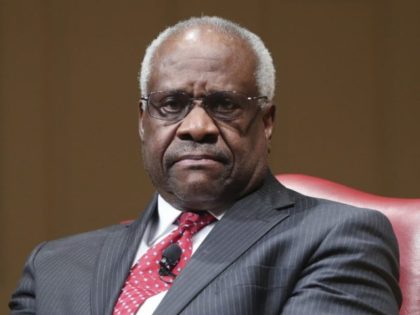

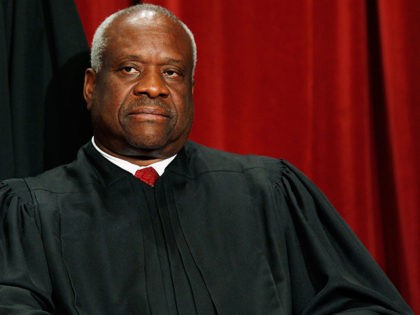

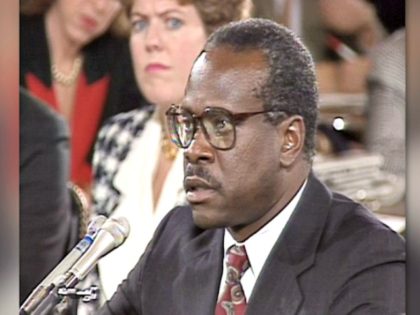

Anita Hill, who accused Supreme Court Associate Justice Clarence Thomas of sexual harassment during his 1991 confirmation hearings, said Friday on MSNBC’s “The ReidOut” that President Joe Biden needed to do more than just apologize for the past.