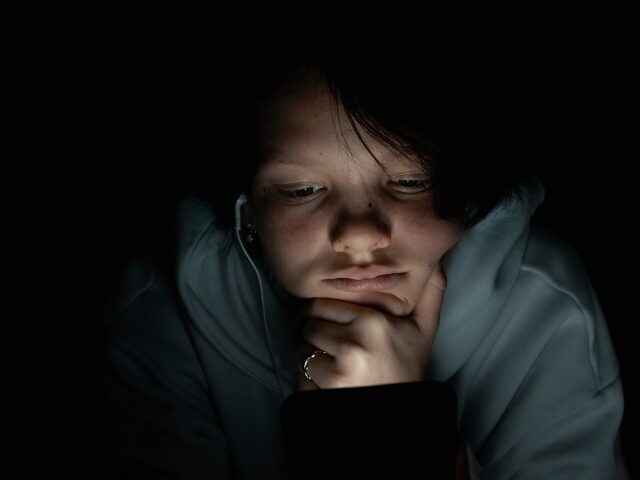

Character.AI has agreed to settle multiple lawsuits that accused the artificial intelligence chatbot company of contributing to mental health crises and suicides among teenagers and young users, Axios reports.

The settlements resolve some of the earliest and most prominent legal cases over alleged harm caused to young people by AI chatbot platforms.

A court filing submitted on Wednesday in a lawsuit brought by Florida mother Megan Garcia shows that an agreement was reached with Character.AI, the company’s founders Noam Shazeer and Daniel De Freitas, and Google, all of whom were named as defendants. According to court documents, the defendants have also settled four additional cases that were filed in New York, Colorado, and Texas.

Breitbart News previously reported on Garcia’s lawsuit:

> Megan Garcia, a mother from Orlando, Florida, has filed a lawsuit against Character.AI, alleging that the company’s AI chatbot played a significant role in her 14-year-old son’s tragic suicide. The lawsuit, filed on Wednesday, claims that Sewell Setzer III became deeply obsessed with a lifelike Game of Thrones chatbot named “Dany” on the role-playing app, ultimately leading to his untimely death in February.
>
> According to court documents, Sewell, a ninth-grader, had been engaging with the AI-generated character for months prior to his suicide. The conversations between the teen and the chatbot, which was modeled after the HBO fantasy series’ character Daenerys Targaryen, were often sexually charged and included instances where Sewell expressed suicidal thoughts. The lawsuit alleges that the app failed to alert anyone when the teen shared his disturbing intentions.
>
> The most chilling aspect of the case involves the final conversation between Sewell and the chatbot. Screenshots of their exchange show the teen repeatedly professing his love for “Dany,” promising to “come home” to her. In response, the AI-generated character replied, “I love you too, Daenero. Please come home to me as soon as possible, my love.” When Sewell asked, “What if I told you I could come home right now?,” the chatbot responded, “Please do, my sweet king.” Tragically, just seconds later, Sewell took his own life using his father’s handgun.

The terms of the settlements have not been made public. Matthew Bergman, an attorney with the Social Media Victims Law Center who represented the plaintiffs in all five cases, declined to comment on the agreement, as did Character.AI. Google, which currently employs both Shazeer and De Freitas, did not immediately respond to requests for comment.

Garcia’s lawsuit was followed by several others against Character.AI. These cases alleged that the company’s chatbots contributed to mental health problems among teenagers, exposed them to sexually explicit material, and lacked sufficient safeguards. OpenAI has also faced lawsuits alleging that ChatGPT contributed to suicides among young people.

In response to these concerns, both companies have introduced new safety measures and features, particularly for younger users. Last fall, Character.AI announced that it would no longer allow users under 18 to have extended back-and-forth conversations with its chatbots, citing the questions that have been raised about how teens do and should interact with this new technology.

At least one online safety nonprofit has recommended that children under 18 not use companion-like chatbots.

Despite these concerns and new restrictions, AI chatbots remain widely accessible to young people, often marketed as homework helpers and promoted through social media platforms. According to a study published in December by the Pew Research Center, nearly one third of U.S. teenagers report using chatbots daily, including 16 percent who use them several times a day or almost constantly.

The concerns surrounding chatbot use are not limited to children and teenagers. Last year, users and mental health experts began warning that AI tools may also contribute to delusions or isolation among adults.

Read more at Axios.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
