Chaos Erupts as Angelinos Disrupt ICE Raids in Downtown Los Angeles

LAPD in Riot Gear Deployed to Assist DHS Agents

Mayor Bass Furious at ICE Agents Enforcing Immigration Laws

‘We Are a City of Immigrants!’

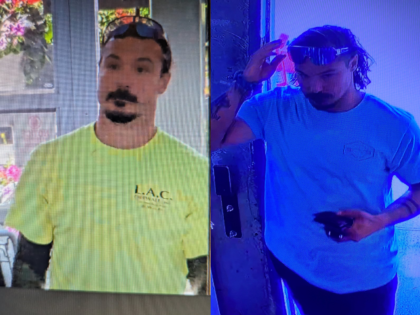

Tense standoffs erupted between U.S. Immigration and Customs Enforcement (ICE) agents and protesters in Downtown Los Angeles.